New Report Encourages Tech Companies to Prevent Image-Based Abuse

A new report, co-authored by College of Arts & Sciences assistant professor Elissa Redmiles, encourages large tech companies to combat image-based sexual abuse on their platforms.

“Our goal is to bridge the gap between those who are being harmed by image-based sexual abuse and the tech companies that own the sites where this harm occurs,” said Redmiles, who began teaching in the Department of Computer Science this fall. “This report surfaces a full suite of existing technical functionality that tech companies can roll out as a comprehensive set of intimacy settings on any platform.”

The report, which relies on interviews with more than 50 adults who have created or shared intimate images, combines an understanding of how users share sensitive images via tech platforms with the technical expertise needed to create systems that prevent abuse.

An Inadequate Security Apparatus

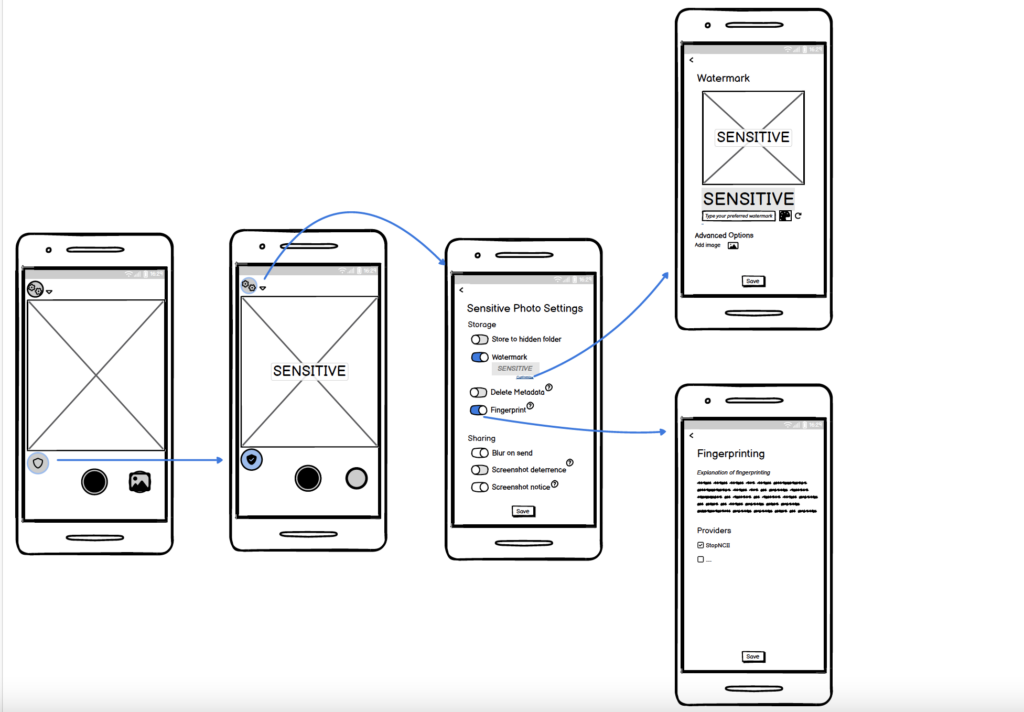

A wireframe used by the research team to model sensitive photo capture for tech companies.

Regardless of a platform’s intended use case, if images can be shared via the platform, companies should anticipate people using those features to share intimate images, according to the report’s authors.

“Among the 52 individuals that we interviewed for this report, we identified 40 different technologies used to share and store intimate content,” said Redmiles. “Not only should every technology company be thinking about this, but we need dedicated solutions for this kind of content.”

Many existing efforts to combat image-based sexual abuse focus on retroactive punishment. While Redmiles believes it’s important to hold abusers accountable, she says this approach is only half of the equation.

“When we spoke with nonprofit organizations — the folks who are on the ground helping people get their images taken down — we heard again and again that there is a lot of talk about justice and what to do after the fact, but none of that minimizes the occurrence of this happening in the first place,” said Redmiles. “This report and our recommendations for tech companies are about making safer technology platforms for everyone that can prevent abuse through robust safety settings.”

Many suggestions brought forward by the report, such as screenshot notifications and watermarking photos, are existing tech solutions that are simply not present on every platform. The report encourages tech companies to adopt these and a slew of other widely available security solutions, such as offering the option to automatically remove metadata (the information on where an image was taken and by whom) from a file before it’s shared on a platform.
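To give a sense of how small such a feature can be, here is a minimal sketch of metadata removal for JPEG photos. It is an illustration only and is not taken from the report: the function name `strip_exif` is hypothetical, and the sketch assumes that the identifying metadata lives in the file's APP1 segments, where the EXIF format stores details such as GPS coordinates and camera information. A production system would also need to handle other image formats and other metadata containers.

```python
import struct

SOI = b"\xff\xd8"  # start-of-image marker that opens every JPEG file
APP1 = 0xE1        # segment type that carries EXIF (and XMP) metadata
SOS = 0xDA         # start-of-scan marker: compressed image data follows


def strip_exif(jpeg: bytes) -> bytes:
    """Return a copy of a JPEG byte string with its APP1 segments removed.

    Hypothetical helper for illustration; assumes a well-formed file.
    """
    if not jpeg.startswith(SOI):
        raise ValueError("not a JPEG file")
    out = bytearray(SOI)
    pos = 2
    while pos + 4 <= len(jpeg):
        if jpeg[pos] != 0xFF:
            raise ValueError("malformed JPEG segment")
        marker = jpeg[pos + 1]
        if marker == SOS:
            # Everything from here on is image data; copy it unchanged.
            out += jpeg[pos:]
            return bytes(out)
        # The two-byte length counts itself but not the two marker bytes.
        (length,) = struct.unpack(">H", jpeg[pos + 2:pos + 4])
        end = pos + 2 + length
        if marker != APP1:
            out += jpeg[pos:end]  # keep every segment except APP1
        pos = end
    raise ValueError("no image data found")
```

A platform could run a step like this on upload, for example `clean = strip_exif(open("photo.jpg", "rb").read())`, and store only the cleaned bytes. The pixels are untouched; only the embedded description of where and how the photo was taken is dropped.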

Published by the European Sex Workers Rights Alliance (ESWA), the report is the result of a collaboration between researchers at ESWA, Brown University, the Max Planck Institute for Software Systems and the University of Washington.

Elissa Redmiles, an assistant professor in the Department of Computer Science.

Redmiles on the Hilltop

At Georgetown, Redmiles teaches a course on human-centered computer science, which she describes as teaching students to center the user when they build technology.

“The normative approach to building technology is telling people how they should use your platforms,” said Redmiles. “This is a vital component, but it doesn’t capture the descriptive nature of human behavior, which is how users actually behave on your platform.”

In her course, Redmiles urges Hoyas, who will be building the digital landscape of tomorrow, to think about how to create technology that satisfies both user needs and business realities. Part of the course involves students going through the full product lifecycle: from identifying a promising problem to investigating prospective user needs and building interactive product prototypes.

“We explore ways of collecting data from people,” said Redmiles. “Not only how they use technology, but their insight into what they want – laypeople can tell you a lot about how technology is and isn’t meeting their needs.”

Prior to joining the faculty at Georgetown, Redmiles was a faculty member at the Max Planck Institute for Software Systems and a fellow at the Berkman Klein Center for Internet & Society at Harvard University. She has also served as a consultant and researcher at multiple institutions, including Microsoft Research, Facebook, the World Bank, the Center for Democracy and Technology and the Partnership on AI.

Redmiles’ other research interests include safety in digital labor and digitally mediated offline interactions, community-based participatory research methods for cybersecurity and building transparency tools for privacy-enhancing technologies such as differential privacy. Next semester, she’s teaching a doctoral seminar on responsible computing.

-by Hayden Frye (C’17)